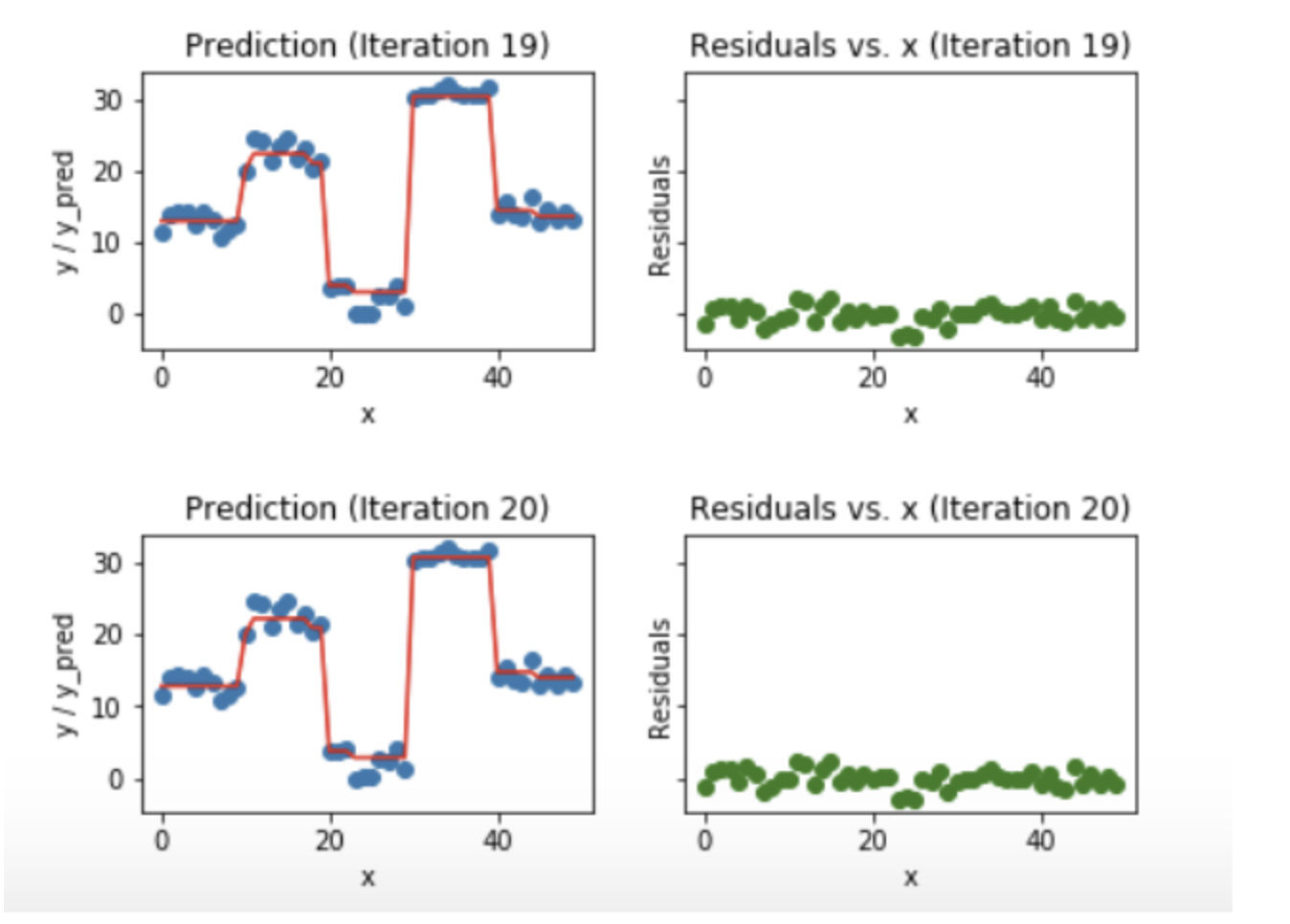

XGBoost addresses overfitting by penalizing overly complex models through regularization.

Difference between Gradient Boosting and Adaptive Boosting (AdaBoost)

Gradient Boosting And Xgboost Hackernoon

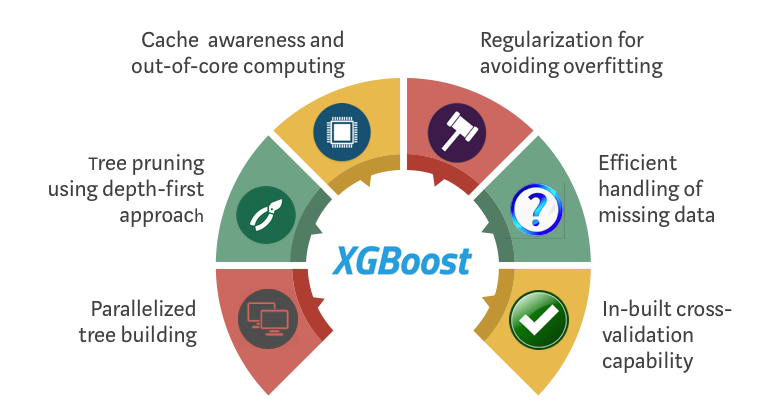

XGBoost (Extreme Gradient Boosting) is a more advanced version of the gradient boosting method.

Gradient boosting algorithms can be used as a regressor, predicting continuous target variables, or as a classifier, predicting categorical target variables. Under the hood, gradient boosting is a greedy algorithm and can overfit a training dataset quickly. XGBoost is an implementation of gradient boosted decision trees designed for speed and performance.

Over the years, gradient boosting has found applications across various technical fields. XGBoost stands for Extreme Gradient Boosting, where the term Gradient Boosting originates from the paper Greedy Function Approximation. Before understanding XGBoost, we first need to understand trees, especially the decision tree.

It was introduced by Tianqi Chen and is currently part of a wider toolkit by DMLC (Distributed Machine Learning Community). Regularized Gradient Boosting is also an … XGBoost is basically designed to enhance the performance and speed of a machine learning model.

We'll use the breast_cancer dataset included in the sklearn datasets collection. Less correlation between the trees in an ensemble of classifiers translates to better performance of the ensemble. In this post you will discover the effect of the learning rate in gradient boosting and how to …
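A minimal sketch of loading that dataset, assuming scikit-learn is installed. `load_breast_cancer` is scikit-learn's loader for the Wisconsin diagnostic breast cancer data; the checks below show the 30 numeric input features and the binary target that the post relies on later.

```python
# Load the breast cancer data bundled with scikit-learn (assumes scikit-learn is installed).
from sklearn.datasets import load_breast_cancer

data = load_breast_cancer()
X, y = data.data, data.target

print(X.shape)                    # (569, 30): 569 samples, 30 input features
print(sorted(set(y.tolist())))    # [0, 1]: a binary classification target
print(data.target_names.tolist()) # ['malignant', 'benign']: label 0 and label 1
```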

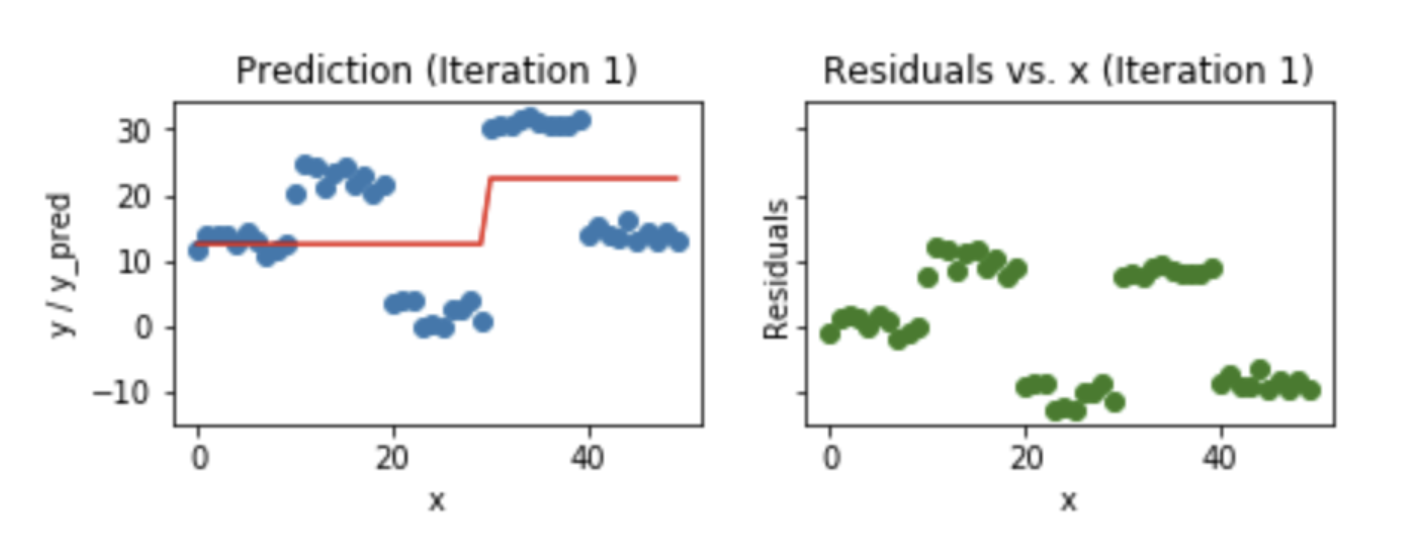

XGBoost also comes with an extra randomization parameter, which reduces the correlation between the trees. AI is concerned with making machines, frameworks, and other devices smart by enabling them to think and perform tasks as humans do. Gradient boosting is an iterative functional gradient algorithm, i.e. an algorithm that minimizes a loss function by iteratively choosing a function that points in the direction of the negative gradient.
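The functional-gradient idea can be sketched in plain Python. This is a minimal sketch under stated assumptions: squared-error loss, a single numeric feature, depth-1 trees ("stumps"), and a fixed learning rate. The helper names `fit_stump`, `gradient_boost`, `predict`, and `training_mse` are hypothetical, not taken from XGBoost or any other library. With squared error the negative gradient is simply the residual, so each round greedily fits the best single split to the current residuals.

```python
def fit_stump(xs, targets):
    """Fit a depth-1 regression tree on one feature.

    Tries every threshold between distinct x values and returns
    (threshold, left_value, right_value) with the lowest squared error.
    Assumes xs contains at least two distinct values.
    """
    best = None
    for t in sorted(set(xs))[:-1]:  # points with x <= t go to the left leaf
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lv = sum(left) / len(left)
        rv = sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def gradient_boost(xs, ys, n_rounds=100, learning_rate=0.1):
    """Least-squares gradient boosting with stumps; returns (f0, lr, stumps)."""
    f0 = sum(ys) / len(ys)  # the constant model that minimises squared loss
    preds = [f0] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For L = (y - F)^2 / 2, dL/dF = F - y, so the negative gradient is y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lv, rv = fit_stump(xs, residuals)  # greedy: best single split this round
        stumps.append((t, lv, rv))
        preds = [p + learning_rate * (lv if x <= t else rv) for x, p in zip(xs, preds)]
    return f0, learning_rate, stumps


def predict(model, x):
    f0, lr, stumps = model
    return f0 + sum(lr * (lv if x <= t else rv) for t, lv, rv in stumps)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9, 5.0, 5.2]


def training_mse(model):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


print(training_mse(gradient_boost(xs, ys, n_rounds=0)))    # constant model only
print(training_mse(gradient_boost(xs, ys, n_rounds=100)))  # after 100 boosting rounds
```

Because each stump is a least-squares fit to the residuals, every round can only lower the training error, which is also why unchecked boosting tends to overfit.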

It is a library written in C++ that optimizes the training of gradient boosting models. It is an efficient and scalable implementation of the gradient boosting framework of Friedman et al. (2000) and Friedman (2001). Gradient boosted trees have been around for a while, and there is a lot of material on the topic.

XGBoost is an algorithm that has recently been dominating applied machine learning and Kaggle competitions for structured or tabular data. A good understanding of gradient boosting will be beneficial as we progress.

Gradient Boosting in Classification

Friedman's paper is titled Greedy Function Approximation: A Gradient Boosting Machine. The three algorithms in scope, CatBoost, XGBoost, and LightGBM, are all variants of gradient boosting. AI deals with the broader problem of automating a system.

This automation can be achieved using any field, such as image processing, cognitive science, neural networks, machine learning, etc. If the regularization parameter λ is set to 0, there is no difference between the prediction results of gradient boosted trees and XGBoost. Extreme Gradient Boosting (XGBoost): XGBoost is one of the most popular variants of gradient boosting.
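The λ = 0 claim can be checked for a single leaf, under one assumption: squared-error loss. In the XGBoost paper the optimal leaf weight is -G / (H + λ), where G and H are the sums of the first and second derivatives of the loss over the samples in that leaf (this formula comes from the paper, not from this post). For squared error each gradient is the negative residual and each Hessian is 1, so with λ = 0 the weight equals the mean residual, which is the leaf value plain least-squares gradient boosting uses. The function names are hypothetical.

```python
def xgb_leaf_weight(residuals, lam):
    """Leaf weight -G / (H + lambda), assuming squared-error loss.

    With l = 0.5 * (y - pred)**2, each gradient is pred - y = -residual
    and each Hessian is 1, so G = -sum(residuals) and H = len(residuals).
    """
    G = sum(-r for r in residuals)
    H = float(len(residuals))
    return -G / (H + lam)


def gb_leaf_value(residuals):
    """Plain least-squares gradient boosting leaf value: the mean residual."""
    return sum(residuals) / len(residuals)


residuals = [-10.5, 6.5, 7.5, -7.5]  # hypothetical residuals falling in one leaf
print(gb_leaf_value(residuals))         # -1.0
print(xgb_leaf_weight(residuals, 0.0))  # -1.0: identical when lambda = 0
print(xgb_leaf_weight(residuals, 1.0))  # -0.8: lambda shrinks the weight toward 0
```

For other losses the Hessians are not all 1, so the two leaf values can differ even at λ = 0; the equivalence shown here is specific to squared error.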

This tutorial will explain boosted trees in a self-contained and principled way using the … The code imports `pandas` as `pd`, imports `XGBClassifier` from `xgboost`, and then imports from `sklearn` … Two solvers are included: a linear model solver and a tree learning algorithm.

In practice, there is usually little drawback to using XGBoost over other boosting algorithms; in fact, it often shows the best performance. Given 30 different input … It is a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework.

It therefore adds methods to handle … In addition, Chen and Guestrin introduce shrinkage (i.e. a learning rate) and column subsampling. XGBoost stands for Extreme Gradient Boosting and was proposed by researchers at the University of Washington.

The purpose of this vignette is to show you how to use XGBoost to build a model and make predictions. XGBoost (eXtreme Gradient Boosting) is a machine learning algorithm that focuses on computation speed and model performance. The algorithm can be used for both regression and classification tasks and has been designed to work with large …

This is a binary classification dataset. The main aim of this algorithm is to increase speed and efficiency in competitions. To cater to this, there are four enhancements to basic gradient boosting.

Enhancements to Basic Gradient Boosting

For XGBoost, some new terms are introduced: λ (lambda), the regularization parameter; γ (gamma), used for automatic tree pruning; and η (eta), which controls how quickly the model converges. Up to this point, it is the same as the gradient boosting technique.
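The three terms can be seen working together on a single split of a single tree. This is a simplified sketch, assuming squared-error loss and using the common textbook "similarity score" (sum of residuals)² / (number of residuals + λ). The XGBoost paper writes the split gain with an extra factor of ½ and prunes bottom-up across the whole tree, so treat the γ comparison here as illustrative rather than identical to the library's internal `gamma` check. All function names and residual values are hypothetical.

```python
def similarity(residuals, lam):
    """Similarity score: (sum of residuals)^2 / (number of residuals + lambda)."""
    return sum(residuals) ** 2 / (len(residuals) + lam)


def split_gain(left, right, lam):
    """How much a split improves on the unsplit node (left + right)."""
    return similarity(left, lam) + similarity(right, lam) - similarity(left + right, lam)


def keep_split(left, right, lam, gamma):
    """Gamma pruning, simplified to one split: keep it only if gain exceeds gamma."""
    return split_gain(left, right, lam) - gamma > 0


def leaf_output(residuals, lam):
    """Leaf value: sum of residuals / (number of residuals + lambda)."""
    return sum(residuals) / (len(residuals) + lam)


left, right = [-10.5], [6.5, 7.5, -7.5]  # hypothetical residuals after a split
lam, gamma, eta = 1.0, 50.0, 0.3

print(round(split_gain(left, right, lam), 4))           # 62.4875
print(keep_split(left, right, lam, gamma))              # True: gain exceeds gamma = 50
print(keep_split(left, right, lam, 70.0))               # False: split would be pruned
print(leaf_output(left, lam), leaf_output(right, lam))  # -5.25 1.625

# eta scales the tree's output before it is added to the previous prediction.
old_prediction = 0.5
print(round(old_prediction + eta * leaf_output(right, lam), 4))  # 0.9875
```

Raising λ lowers every similarity score and pulls leaf outputs toward zero, raising γ prunes more splits, and lowering η makes each tree's correction smaller.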

A problem with gradient boosted decision trees is that they are quick to learn and overfit training data. Now calculate the similarity score:

$$\mathrm{SS} = \frac{\mathrm{SR}^2}{N + \lambda}$$

Here SR is the sum of residuals, N is the number of residuals, and λ is the regularization parameter.

Training a simple XGBoost classifier

Let's first see how a simple XGBoost classifier can be trained.

Differences between tree-based techniques

One effective way to slow down learning in a gradient boosting model is to use a learning rate, also called shrinkage or eta in the XGBoost documentation. XGBoost is short for the eXtreme Gradient Boosting package.
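The slowing effect of shrinkage can be seen in an idealized sketch. It assumes, unrealistically, that every weak learner predicts the current residual exactly; with learning rate η, only η of that prediction is added, so the residual shrinks by a factor of (1 - η) each round. `residual_after_rounds` is a hypothetical helper.

```python
def residual_after_rounds(target, learning_rate, n_rounds):
    """Residual left after boosting toward a single target value.

    Idealised assumption: each weak learner predicts the current residual
    exactly, and shrinkage adds only `learning_rate` times that prediction.
    """
    prediction = 0.0
    for _ in range(n_rounds):
        residual = target - prediction
        prediction += learning_rate * residual
    return target - prediction


for eta in (1.0, 0.3, 0.1):
    print(eta, round(residual_after_rounds(10.0, eta, 10), 4))
# 1.0 0.0
# 0.3 0.2825
# 0.1 3.4868
```

With η = 1 the target is fitted in one round; smaller η needs more rounds to fit the same target, and that slower, more cautious fitting is what helps reduce overfitting in practice.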

The following code is for XGBoost. A learning rate and column subsampling (randomly selecting a subset of features) are added to this gradient tree boosting algorithm, which allows further reduction of overfitting. Tree constraints: these include the number of trees, tree depth, number of nodes or number of leaves, and number of observations per split.

In this post you will discover XGBoost and get a gentle introduction to what it is, where it came from, and how you …

Xgboost Algorithm Long May She Reign By Vishal Morde Towards Data Science

Gradient Boosting And Xgboost Note This Post Was Originally By Gabriel Tseng Medium

Xgboost Extreme Gradient Boosting Xgboost By Pedro Meira Time To Work Medium

Xgboost Versus Random Forest This Article Explores The Superiority By Aman Gupta Geek Culture Medium

Deciding On How To Boost Your Decision Trees By Stephanie Bourdeau Medium

The Ultimate Guide To Adaboost Random Forests And Xgboost By Julia Nikulski Towards Data Science

The Intuition Behind Gradient Boosting Xgboost By Bobby Tan Liang Wei Towards Data Science
